Disc Drive Caching: Just This Side of Magic

- Craig Prall's Blog

Because of built-in caches on hard disk drives and solid-state drives, drive speed tests sometimes make it appear that disks write faster than they read. This entry looks at the behavior I see from the drives in my desktop system. (Originally an answer I posted on Quora.com.)

When writing a batch of small files to a drive and then reading the same files back (using the OS's native copy or move), the transfer rate numbers you see can be misleading. In some cases, the write speed seems faster than the read speed. This is likely because the drive has write caching turned on and the files being written and read back either mostly or wholly fit within the drive’s cache. In this blog entry, I'm going to illustrate the effect of drive caching based on a small subset of drives: the ones in my gaming desktop.

Most if not all drives have some amount of high-speed cache memory that they use to hold blocks of data that need to be written. If the drives you are testing have something like a 32MB or 64MB cache and the files you are writing are smaller than that, the data is first written to the cache, and at that point the write operation is complete as far as the operating system is concerned. The drive takes care of saving the data in its write cache to the actual drive storage a bit later.
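To put rough numbers on that, here is a toy model of a write-back cache. The cache size and the two speeds are made-up illustrative values, not measurements from any of my drives, and the model ignores the fact that a real drive drains its cache in the background while new data arrives.

```python
def apparent_write_speed(size_mb, cache_mb=64.0, cache_mb_s=2000.0, media_mb_s=150.0):
    """Apparent write throughput (MB/s) in a toy write-back cache model.

    The part of the write that fits in the cache completes at cache speed;
    the remainder has to wait for the slower storage media.
    All default numbers are illustrative assumptions.
    """
    cached = min(size_mb, cache_mb)   # portion absorbed by the cache
    spilled = size_mb - cached        # portion that must go straight to media
    seconds = cached / cache_mb_s + spilled / media_mb_s
    return size_mb / seconds


for size in (16, 64, 256, 1024):
    print(f"{size:5d} MB write -> {apparent_write_speed(size):7.1f} MB/s apparent")
```

In this model, a write that fits in the cache reports the cache's speed, while a write many times larger than the cache reports something close to the media's speed.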

As you might have guessed, this works great so long as the power is on. If the power to the drive goes out before the actual writes have finished (that is, before the cache has been flushed), data could be lost. It’s not just rotating hard disk drives (HDDs) that have caches; most SSDs also use caches, but their caches are sometimes different from HDD caches. Instead of using volatile RAM that requires power to work, SSDs can use a faster version of the same flash memory that the drive uses for storing data. Also, some SSDs are manufactured with enough capacitors on them that they have time to flush their write cache if they sense the power has gone out. (Capacitors act as tiny batteries in this application - for a short while anyway.)
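From the application side, the usual way to narrow that window is to ask for a flush explicitly. Below is a minimal sketch in Python; `write_durably` is a name I made up for illustration. Note the assumption: `os.fsync` asks the operating system to commit its buffers to the device, but whether the drive's own write cache is also flushed depends on the OS, the file system, and the drive.

```python
import os


def write_durably(path, data):
    """Write bytes to `path` and request that they reach the storage device."""
    with open(path, "wb") as f:
        f.write(data)
        f.flush()             # move Python's internal buffer into the OS
        os.fsync(f.fileno())  # ask the OS to commit its buffers to the device
```

The trade-off is speed: every call waits for the flush, which is exactly the delay the write cache was hiding.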

Most drive testing software will either disable write caching or randomize the data (so there are no cache “hits” [i.e., matching data in cache]) and write out enough data that the cache can't contain all of it. I like to use CrystalDiskMark for HDDs. It’s small, portable, and simple. For SSDs, I often use AS SSD Benchmark. (You may have to search around for it. Here is the official website [in Germany].)
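The same idea can be sketched in a few lines of Python: write random blocks, make the total larger than the cache, and time the write and the read-back. This is only a rough illustration and not how any of the tools above work internally. The function name and the file name `cache_test.bin` are hypothetical, and the read-back will usually be served from the operating system's own file cache, so the read figure is inflated unless that cache is bypassed or cleared.

```python
import os
import time


def time_write_read(directory, total_bytes, block_bytes=1024 * 1024):
    """Write then read random data in `directory`; return (write_mb_s, read_mb_s)."""
    path = os.path.join(directory, "cache_test.bin")  # hypothetical scratch file
    block_count = max(1, total_bytes // block_bytes)
    # Generate the random blocks up front so their cost is not timed.
    # This holds the whole test set in memory, so keep total_bytes reasonable.
    blocks = [os.urandom(block_bytes) for _ in range(block_count)]
    try:
        start = time.perf_counter()
        with open(path, "wb") as f:
            for block in blocks:
                f.write(block)
            f.flush()
            os.fsync(f.fileno())  # include the flush in the write timing
        write_s = time.perf_counter() - start

        start = time.perf_counter()
        with open(path, "rb") as f:
            while f.read(block_bytes):
                pass
        read_s = time.perf_counter() - start
    finally:
        if os.path.exists(path):
            os.remove(path)

    megabytes = block_count * block_bytes / 1e6
    # Guard against a zero interval on very small test sizes.
    return megabytes / max(write_s, 1e-9), megabytes / max(read_s, 1e-9)
```

Calling `time_write_read(some_directory, 256 * 1024 * 1024)` on a drive with a 64MB cache would write four times the cache size, which is the point of the exercise.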

At a certain file size and above, some SSDs compress the data before they store it. This serves at least two purposes: less data is written, so the write is faster, and because less data is written, there is less wear on the SSD's limited write cycles. For this post though, I am going to use a good benchmark for testing both SSDs and HDDs, which is ATTO Disk Benchmark. (Download from here if you don’t want to fill out the form.) I like the way it graphs the results - making it easy to see write and read speeds side-by-side for various block sizes.
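How much compression can help depends heavily on the data, which is also why the test pattern a benchmark writes matters. The sketch below uses Python's `zlib` purely as a stand-in; I don't know what algorithm, if any, a given SSD controller uses.

```python
import os
import zlib


def compression_ratio(data):
    """Original size divided by zlib-compressed size (higher = more compressible)."""
    return len(data) / len(zlib.compress(data))


block = 1024 * 1024  # one 1MB test block
print(f"zeros:  {compression_ratio(bytes(block)):.1f}x")
print(f"random: {compression_ratio(os.urandom(block)):.2f}x")
```

A block of zeros shrinks to almost nothing, while random data does not shrink at all, so a drive that compresses would look much faster on the first kind of test data than on the second.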

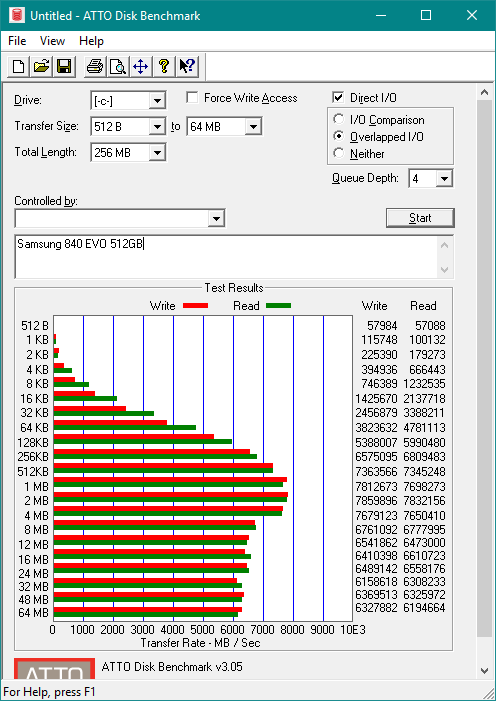

Using ATTO Disk Benchmark on an SSD, I get the following:

This is from a Samsung 840 EVO 512 GB SSD. As you can see, writes start out being faster than reads for very small block sizes. I believe this is because of the write cache the 840 EVO has. At around the 4KB block size, reads become faster than writes. In disk benchmarks that you might see in magazines, the 4KB numbers are often shown as the first result. I believe that’s because that’s where most disk caches are taken out of the equation.

However, if you look at the graph above, you’ll notice that at 512KB-sized writes and above, write speeds are again faster than read speeds. I think that is where the 840 EVO starts to compress the blocks before writing them, which gives the appearance that writes are faster than reads. On the read operation, the block must be decompressed into its original form and then delivered to the application (in this case, the disk testing application). Above that size, there are occasional sizes where reading tests faster than writing, but on the whole writing appears faster. At the least, the drive is very fast at compressing the block and storing it (with potentially some involvement from the cache). The shape of the test output is a bell curve. I think that is because at the 2MB or 4MB block size, the advantage of compressing the data is maxed out. (Perhaps the buffer for the compression algorithm is 2MB or 4MB. Any blocks bigger than that take two or more compression cycles.)

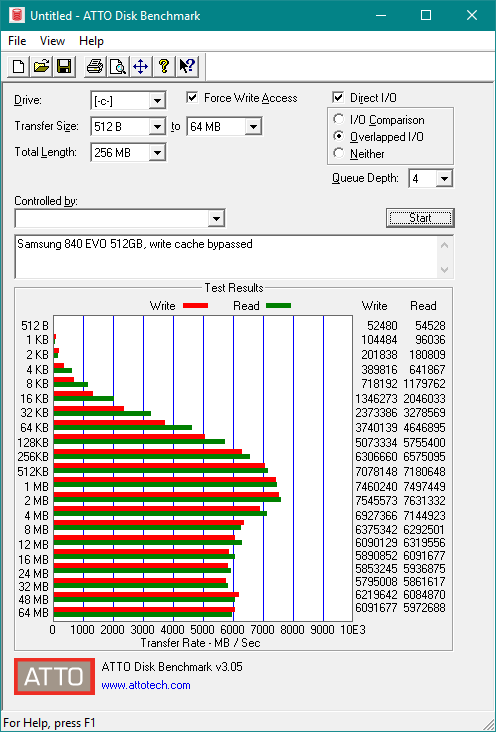

You may have noticed the checkbox Force Write Access. If that is checked, ATTO Disk Benchmark bypasses the write cache. The same test above with Force Write Access checked is:

As you can see, with the write cache bypassed, reads generally are faster than writes (although not by a lot and not always). The SSD is still able to compress the data before writing it, but the effect of bypassing the cache is that both reads and writes are slower than when it is enabled. (I am not sure how ATTO Disk Benchmark bypasses the cache, but the results suggest that it does have the expected effect.)

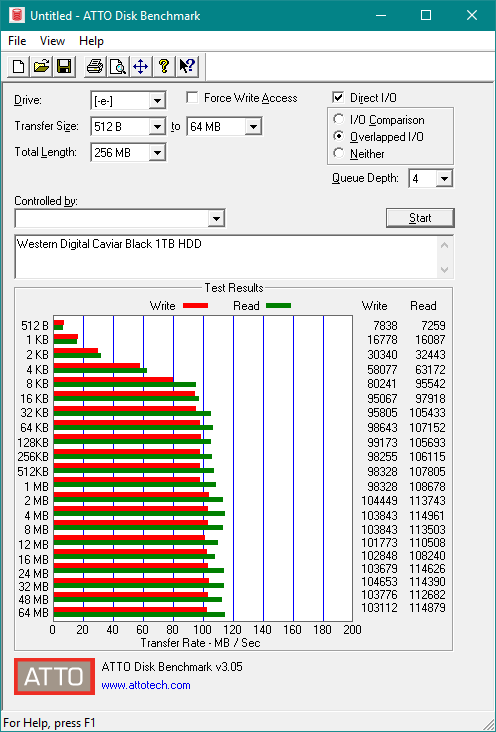

Using ATTO Disk Benchmark on an HDD (without Force Write Access set), I get the following:

The hard drive above is a Western Digital Caviar Black 1TB drive. HDDs like this one don’t compress the data before writing. Notice that once we pass block sizes above 4KB, not only are reads faster than writes, there is never a time when writes beat reads. There is no reason HDDs couldn’t compress blocks, but they have many more write cycles before they wear out (relative to SSDs) and they are cheap to manufacture (as-is), so the impetus is not there. It may be that there is little cost benefit in going back and modifying the well-established HDD controller technology. Also notice that, unlike SSDs, which tend to have a bell-shaped performance curve, HDDs tend to plateau at some point and stay there.

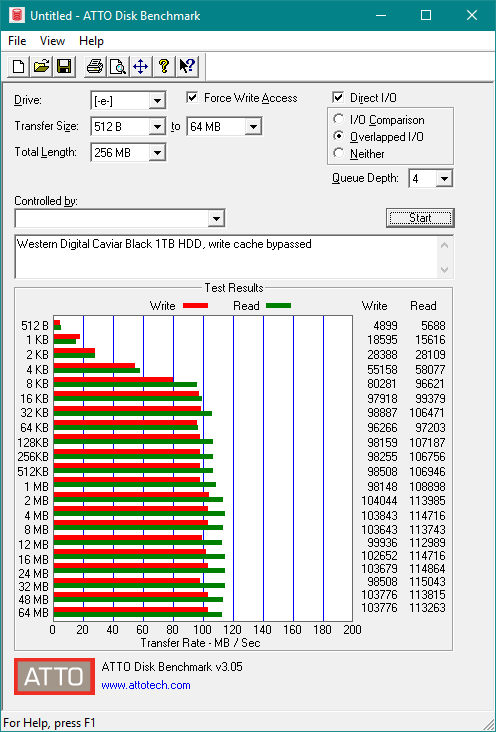

And just for completeness, the HDD test with Force Write Access set:

The above shows that, for the ATTO Disk Benchmark application at least, bypassing (or enabling) the write cache doesn’t have the same level of effect on HDDs that it has on SSDs. For small block sizes, the effect is noticeable, but at larger sizes, there is often little difference. I surmise that a good portion of the blocks written out during everyday use are smaller than 4KB, so write caches help.